Segmenting real-world scenes without supervision is a critical, unsolved problem in computer vision. Here, we introduce Spelke Object Inference, an unsupervised method for perceptually grouping objects in static images by predicting which parts of a scene would move as cohesive wholes. (We call these wholes “Spelke objects” after ideas from the well-known cognitive scientist Elizabeth Spelke.)

In principle, it is straightforward to use dynamic signals to learn what counts as a cohesive object. However, standard neural network architectures lack grouping primitives that can take full advantage of Spelke Object Inference. Thus our main contribution is the design of a neural grouping operator and a differentiable model based on it, the Excitatory-Inhibitory Segment Extraction Network (EISEN). EISEN learns to segment static, real-world scenes from motion training signals, greatly improving on the state of the art in this challenging domain.

Spelke Object Inference

Our method is based on a key idea from studies of human development. Spelke and colleagues showed that infants initially group scene elements based on coherent motion but, as they mature, begin to use static cues such as the classical Gestalt properties [1,2]. Infants may be learning static cues that predict which elements move as a unit – a natural case of self-supervision that could inform segmentation algorithms.

However, actual implementations of this process face two challenges. First, most objects are not moving at any given moment, so learning signals applied to static scene elements may be misleading. Second, many objects move only when they are moved by an agent, in which case the object’s motion and the agent’s motion need to be disentangled.

EISEN’s architecture and training procedure are designed to address these challenges.

The Architecture of EISEN

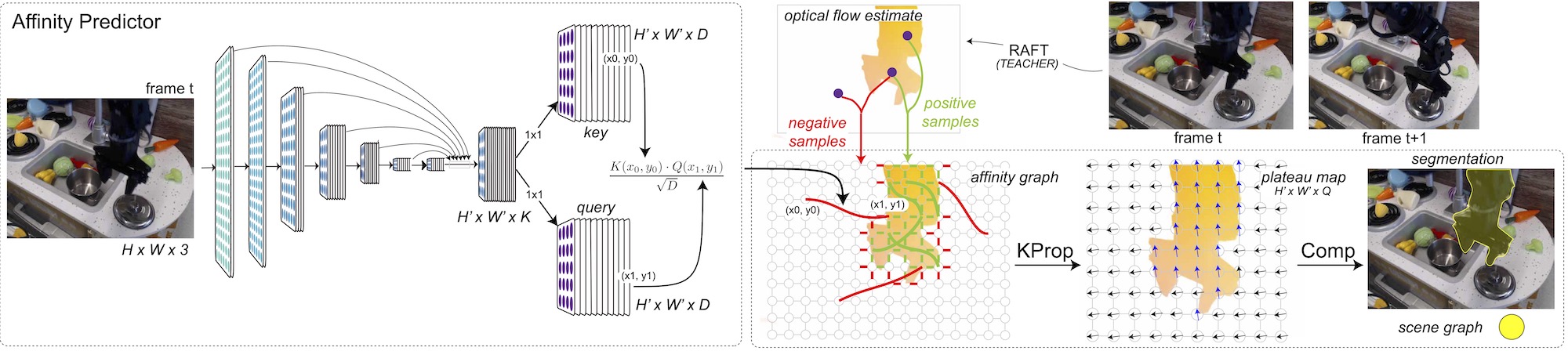

Perceptual grouping is fundamentally a form of relational inference: for each element of a scene, which other elements are part of the same object? EISEN therefore predicts, from a static image, a graph of (mostly local) pairwise affinities that represent physical connectivity between scene elements.
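To make the idea of a mostly local affinity graph concrete, here is a minimal sketch of how candidate pairs could be enumerated on a grid of scene elements. The function name `local_pairs` and the fixed square window controlled by `radius` are assumptions made for illustration; the actual model may choose its pairs differently (for example, by also sampling some long-range pairs).

```python
def local_pairs(height, width, radius=1):
    """List index pairs (i, j), i < j, of grid elements within `radius` of each other.

    Elements are indexed row-major on a height x width grid. The square
    window is a hypothetical choice, used here only for illustration.
    """
    pairs = []
    for r in range(height):
        for c in range(width):
            i = r * width + c
            for dr in range(-radius, radius + 1):
                for dc in range(-radius, radius + 1):
                    rr, cc = r + dr, c + dc
                    j = rr * width + cc
                    # Keep each in-bounds pair once, skipping self-pairs.
                    if 0 <= rr < height and 0 <= cc < width and i < j:
                        pairs.append((i, j))
    return pairs


# A 1 x 3 strip with radius 1 connects only adjacent elements.
print(local_pairs(1, 3))
```

A model in this style would predict one affinity value per listed pair, so the graph grows linearly with the number of elements rather than quadratically.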

This architectural choice resolves the first challenge above: pairs of elements that move together are trained to have high affinity, pairs that move independently are trained to have low affinity, and pairs of two static elements receive no training signal, because their lack of motion says nothing about their affinity.
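The three cases above can be sketched as a masked loss. This is an illustrative sketch rather than the released training code: the per-element `moving` flags, the `group` ids for motion segments, and the choice of binary cross-entropy are all assumptions.

```python
import math


def affinity_target(moving_a, moving_b, group_a, group_b):
    """Return (target, weight) for one pair of scene elements."""
    if not moving_a and not moving_b:
        return 0.0, 0.0  # both static: no training signal
    if moving_a and moving_b and group_a == group_b:
        return 1.0, 1.0  # moving together: high affinity
    return 0.0, 1.0      # moving independently: low affinity


def masked_bce(pred, pairs, moving, group):
    """Binary cross-entropy averaged over supervised pairs only."""
    total, count = 0.0, 0.0
    for (i, j), p in zip(pairs, pred):
        target, weight = affinity_target(moving[i], moving[j], group[i], group[j])
        p = min(max(p, 1e-7), 1.0 - 1e-7)  # avoid log(0)
        loss = -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))
        total += weight * loss
        count += weight
    return total / count if count else 0.0


# Elements 0 and 1 move together; elements 2 and 3 are static.
moving = [True, True, False, False]
group = [0, 0, -1, -1]
print(masked_bce([0.5, 0.5], [(0, 1), (2, 3)], moving, group))
```

In this sketch the static pair `(2, 3)` contributes nothing to the loss, so a wrong prediction for it is never penalized.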

A Neural Grouping Primitive: Kaleidoscopic Propagation + Competition

But pairwise affinities are not yet objects – they need to be grouped. EISEN therefore applies a pair of neural grouping modules, Kaleidoscopic Propagation (KProp) and Competition, to extract objects from a scene’s affinity graph.

Kaleidoscopic Propagation (KProp) first randomly initializes a retinotopic array of high-dimensional vector nodes, which represent scene elements. It then recurrently propagates two types of messages on the affinity graph:

- Excitatory messages, which make nodes with high affinity have similar vectors;

- Inhibitory messages, which make nodes with low affinity have orthogonal vectors.

Because physical connectivity is transitive, over many iterations this propagation produces “plateaus” of nodes with nearly the same vector and sharp edges where the scene has an object border.
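A toy version of this propagation can be written in a few lines. This is a simplified sketch under stated assumptions, not the EISEN implementation: the affinity matrix is dense, an affinity above `threshold` counts as excitatory and anything below as inhibitory, `None` marks a missing edge, and the update is a rectified, normalized sum. All names and default values are hypothetical.

```python
import math
import random


def normalize(v, eps=1e-8):
    """Scale a vector to (approximately) unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / (norm + eps) for x in v]


def random_init(n, dim, seed=0):
    """Random nonnegative starting vectors, one per node."""
    rng = random.Random(seed)
    return [[rng.random() for _ in range(dim)] for _ in range(n)]


def kprop(affinity, h0, num_iters=10, threshold=0.5):
    """Propagate excitatory and inhibitory messages on an affinity graph.

    affinity[i][j] lies in [0, 1], or is None where nodes i and j share no edge.
    """
    n, dim = len(h0), len(h0[0])
    h = [normalize(v) for v in h0]
    for _ in range(num_iters):
        new_h = []
        for i in range(n):
            update = list(h[i])
            for j in range(n):
                a = affinity[i][j]
                if j == i or a is None:
                    continue
                # High affinity pulls vectors together; low affinity pushes them apart.
                sign = a if a > threshold else -(1.0 - a)
                for k in range(dim):
                    update[k] += sign * h[j][k]
            # Rectify, then renormalize so vectors stay on the unit sphere.
            new_h.append(normalize([max(x, 0.0) for x in update]))
        h = new_h
    return h


# Two objects: nodes {0, 1} and nodes {2, 3}.
A = [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 1, 1], [0, 0, 1, 1]]
h = kprop(A, [[1.0, 0.0], [1.0, 0.5], [0.0, 1.0], [0.5, 1.0]])
print(h)
```

In this example the two nodes of each object converge to a shared vector, and the vectors of the two objects become orthogonal, which is the plateau structure described above.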

Competition then picks out well-formed node clusters simply by dropping “object pointers” on this plateau map. Each pointer yields an object segment, corresponding to all the nodes that have roughly the same vector as the one at the pointer’s position. Because some segments may be redundant, several rounds of winner-take-all dynamics deactivate pointers whose territory overlaps with that of another pointer.
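The pointer mechanism can be illustrated with a greedy stand-in. Note the substitution: instead of several rounds of winner-take-all dynamics, this sketch uses a single pass of overlap-based suppression that visits larger segments first. The thresholds `sim_threshold` and `max_overlap`, and all function names, are assumptions for illustration.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def segment_for_pointer(h, pointer, sim_threshold=0.9):
    """All nodes whose vector roughly matches the vector at the pointer's position."""
    return frozenset(i for i, v in enumerate(h) if dot(v, h[pointer]) > sim_threshold)


def compete(h, pointers, sim_threshold=0.9, max_overlap=0.5):
    """Keep pointers with distinct territories; drop redundant ones."""
    candidates = [(p, segment_for_pointer(h, p, sim_threshold)) for p in pointers]
    # Larger segments are considered first (ties keep their original order).
    candidates.sort(key=lambda c: len(c[1]), reverse=True)
    winners = []
    for pointer, seg in candidates:
        if not seg:
            continue
        # Intersection-over-union against every pointer already kept.
        redundant = any(
            len(seg & kept) / len(seg | kept) > max_overlap for _, kept in winners
        )
        if not redundant:
            winners.append((pointer, seg))
    return winners


# A plateau map with two plateaus: nodes {0, 1} and nodes {2, 3, 4}.
plateaus = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0], [0.0, 1.0]]
for pointer, seg in compete(plateaus, pointers=[0, 1, 3]):
    print(pointer, sorted(seg))
```

Pointers 0 and 1 land on the same plateau, so only one of them survives, while pointer 3 claims the other plateau.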

Learning to Segment Simulated and Real-world Images

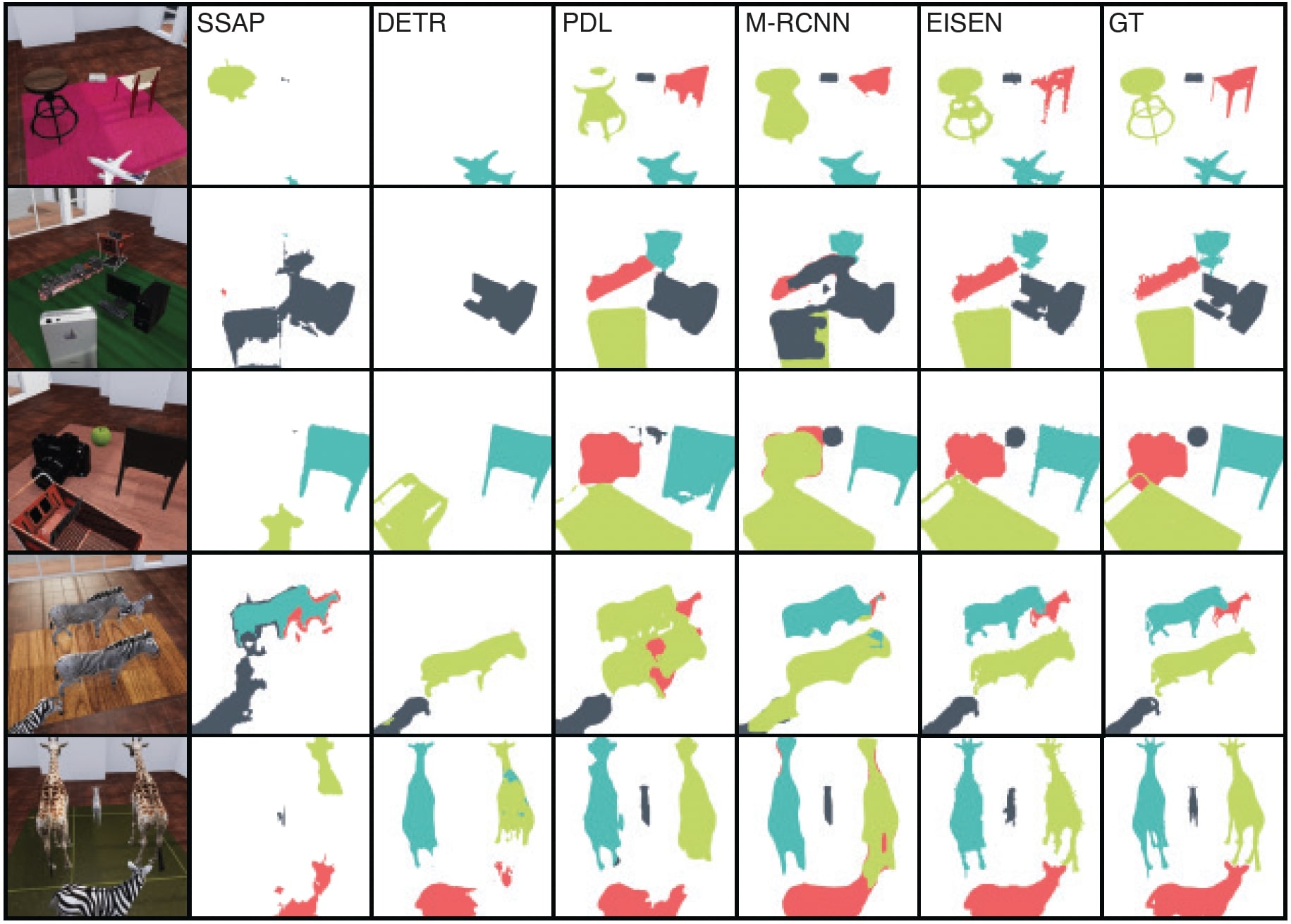

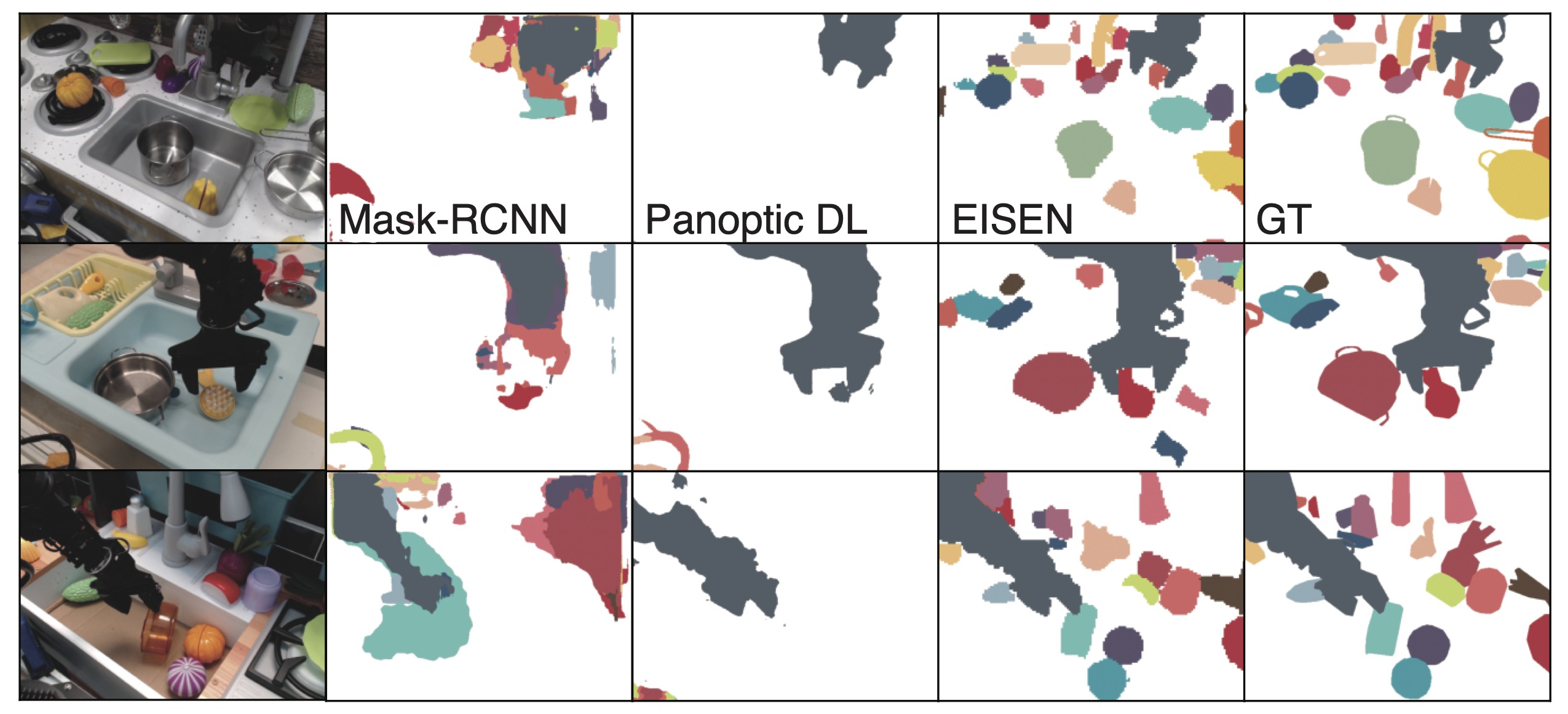

EISEN learns from motion to segment objects in single, static frames from three challenging datasets:

- ThreeDWorld Playroom, with simulated scenes containing multiple complex, realistically rendered objects;

- DAVIS 2016, with real scenes of objects moving against complex backgrounds (evaluated on single frames);

- Bridge Data, a real-world dataset with a human-controlled robot arm interacting with objects in a cluttered “kitchen.”

ThreeDWorld Playroom Dataset

DAVIS: Densely Annotated VIdeo Segmentation 2016

Real-World Robotics Dataset (“Bridge” data)

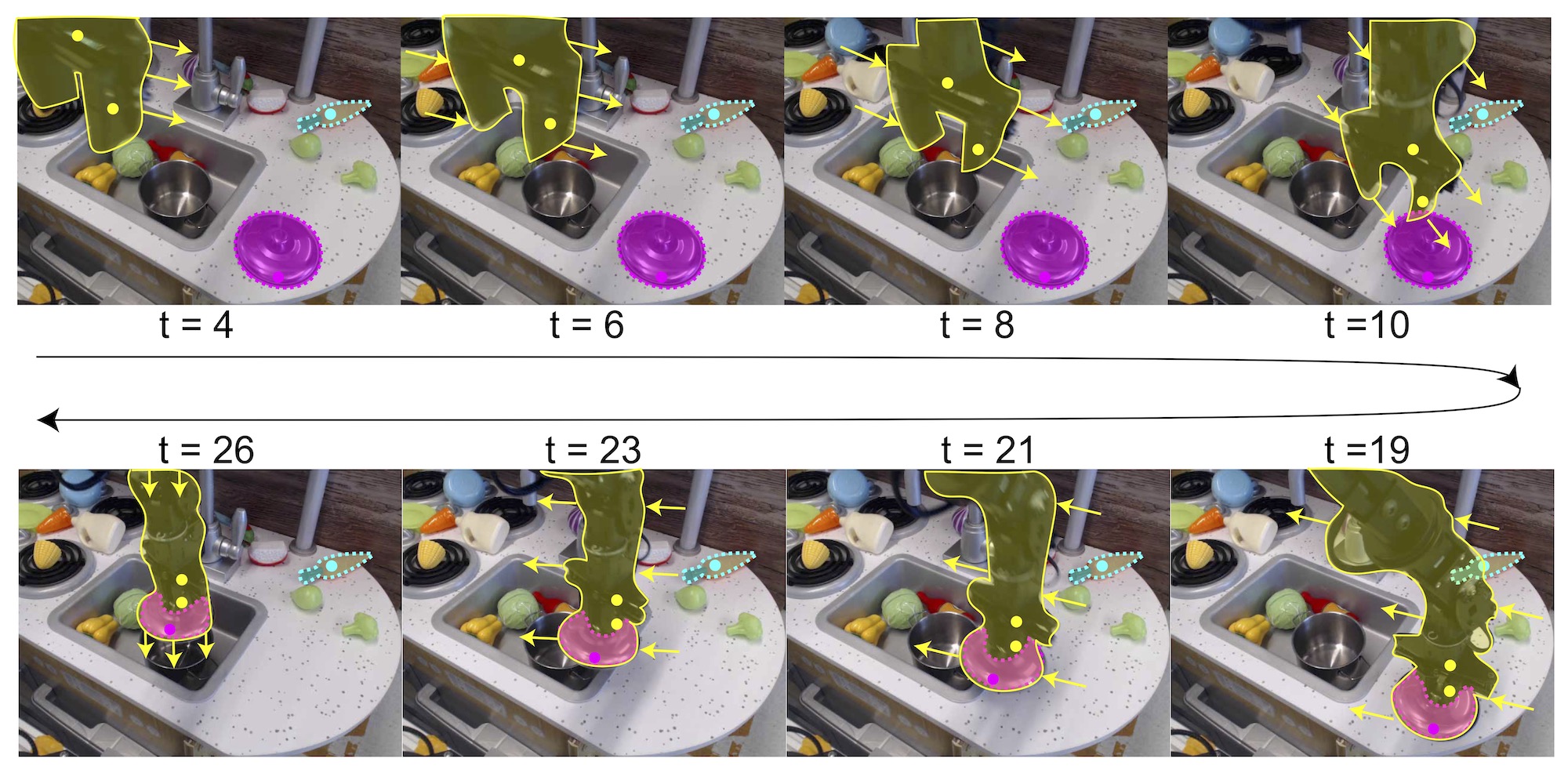

In Bridge, most objects move only when the robot arm moves them. To decouple agent from object motion during training – the second challenge of applying Spelke Object Inference – we introduce a form of top-down inference into EISEN’s learning curriculum. Because EISEN sees many cases of the moving agent (e.g., Bridge’s robot arm) early in training, it naturally learns to segment this first. Thus, later in training, the agent can be “explained away” from raw motion signals, isolating inanimate objects for affinity learning. We implement this as a simple, round-based curriculum in which EISEN automatically learns to segment more and more objects from static cues alone.
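The "explaining away" step can be pictured as masking the motion signal with segments the model already predicts. This is a deliberately simplified sketch: `explain_away` is a hypothetical helper, motion is reduced to one boolean per scene element, and the segments are assumed to come from earlier rounds of the curriculum.

```python
def explain_away(moving, predicted_segments):
    """Remove motion already accounted for by previously learned segments.

    moving: one bool per scene element (raw motion signal).
    predicted_segments: sets of element indices that the model segmented in
        earlier rounds (e.g. the agent). Hypothetical input format.
    """
    explained = set().union(*predicted_segments)
    return [m and i not in explained for i, m in enumerate(moving)]


# Elements 0-1 belong to the agent, which pushes an object at elements 2 and 4.
raw_motion = [True, True, True, False, True]
agent_segment = {0, 1}
print(explain_away(raw_motion, [agent_segment]))
```

The residual motion would then supply the affinity training signal for the next round, so that the pushed object is no longer grouped with the agent that moved it.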

Learning general grouping priors. EISEN can accurately segment static Spelke objects in the Playroom and DAVIS 2016 datasets that it has not seen at all, let alone seen moving, during training. It may do this by capturing, in its pairwise affinities, fairly generic aspects of real-world “objectness” such as spatial proximity and textural similarity. If so, EISEN could serve as a mechanistic model of how these static cues come to characterize perceptual grouping in adults, whereas coherent motion predominates in infants [1].

Reference

To cite our work, please use:

@InProceedings{chen2022unsupervised,
  author = {Chen, Honglin and Venkatesh, Rahul and Friedman, Yoni and Wu, Jiajun and Tenenbaum, Joshua B and Yamins, Daniel LK and Bear, Daniel M},
  title = {Unsupervised Segmentation in Real-World Images via Spelke Object Inference},
  booktitle = {Proceedings of the European Conference on Computer Vision (ECCV)},
  year = {2022}
}

[1] Spelke, E.S., 1990. Principles of object perception. Cognitive Science, 14(1), pp.29-56.

[2] Carey, S. and Xu, F., 2001. Infants’ knowledge of objects: Beyond object files and object tracking. Cognition, 80(1-2), pp.179-213.